Building from 0 to 1 with AI: Lessons from 15,000 Lines of Code

Four weeks ago, I set out on a journey to understand the power of AI-driven development. It’s hard to ignore the bold claims being made by AI evangelists, like this one from Jensen Huang (Nvidia CEO):

“Everybody in the world is now a programmer. This is the miracle of artificial intelligence.”

That quote piqued my curiosity: just how good is AI at writing code? Let’s find out.

Writing 15,000 lines of code in under 10 hours

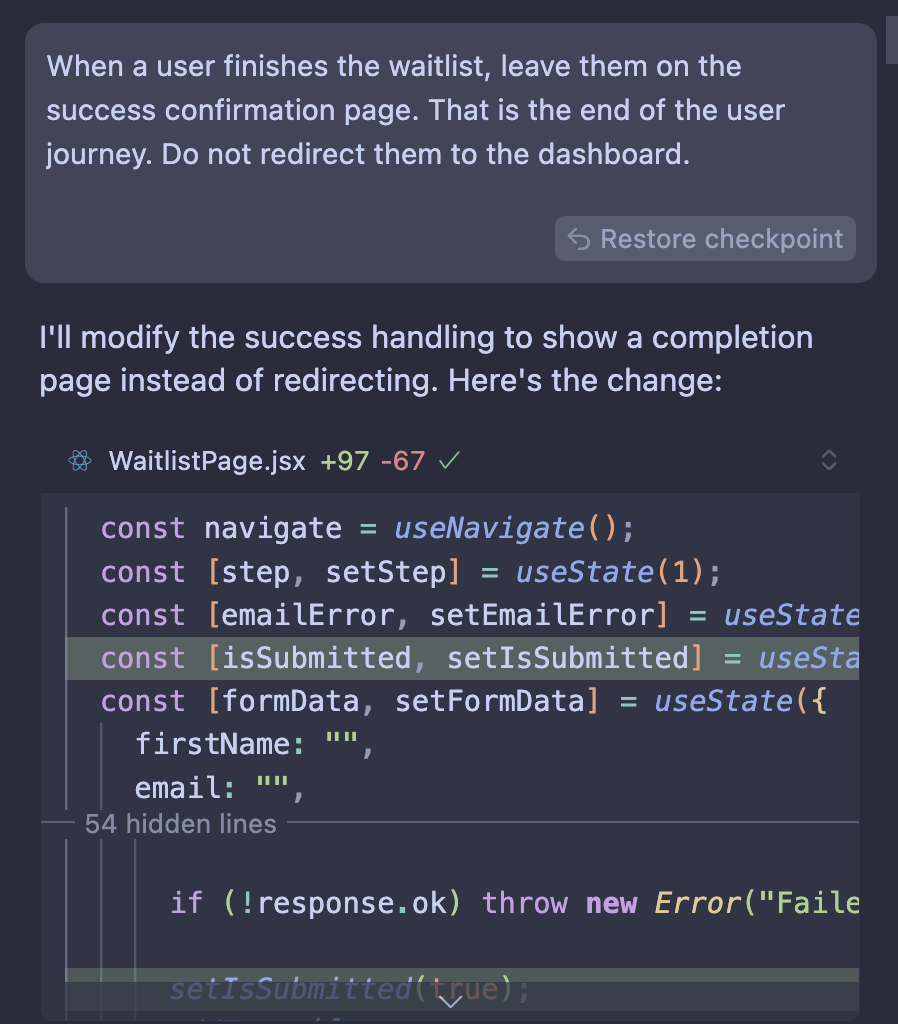

With a side project idea in mind, I decided to build a full-stack app with one twist: I’d let AI take the driver’s seat. Instead of writing code by hand, I focused on prompting and reviewing output from large language models (LLMs) like Claude 3.5 Sonnet and OpenAI’s GPT-4o.

For the experiment, I used Cursor, a VS Code–based editor designed for AI-first development. Cursor adds a sidebar for interacting with AI agents.

Using it, I rapidly prototyped and built a FastAPI backend with endpoints, database operations, and authentication, plus a React frontend with a custom component library, routing, and API integration. In under 10 hours, I had a functional, polished app with over 15,000 lines of code.

Comparing model performance

I tested three models during this build:

- Claude 3.5 Sonnet

- OpenAI GPT-4o

- OpenAI GPT-4o-mini

Claude 3.5 Sonnet handled about 95% of the work. In my experience, it significantly outperformed the others in both quality and responsiveness. Sonnet just gets what you’re trying to do, whereas GPT-4o and 4o-mini often needed multiple rounds of refinement to land the right solution.

That said, GPT-4o shone at making sweeping structural changes. Early in the project, the React app was descending into chaos: components, pages, and utilities were all dumped into src/, and the repo was clearly outgrowing its flat structure. So I decided to test the model's limits and gave it one of the riskiest prompts of the project:

“Let’s refactor the frontend.”

Armed with detailed guidance and a desired folder structure, GPT-4o reorganised files, extracted reusable components, and applied DRY principles—without breaking anything.

But seriously: don’t try this on a production codebase. The risk far outweighs the reward.

What worked well

After 250+ prompts, one thing became crystal clear: success depends on writing precise, exam-style prompts. Here’s an example:

“The search bar loses focus after typing the first letter; after that, all subsequent letters hold focus as expected. Fix this search bar so that it does not lose focus after typing the first letter.”

Compare that to a vague prompt like “That didn’t work”, which will almost always underperform. You’ll get much better results with:

“The applied change does not correctly hold focus after typing the first letter. Take a step back and reconsider your approach to fix the search bar so that it does not lose focus after typing the first letter.”
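One way to keep prompts this specific is to assemble them from the same pieces every time: what happened, what should happen, and whether a previous fix already failed. The sketch below is only an illustration of that habit. `build_fix_prompt` and its parameters are hypothetical names, not part of Cursor or any LLM API.

```python
def build_fix_prompt(observed: str, expected: str, retry: bool = False) -> str:
    """Assemble a bug-fix prompt from observed and expected behavior.

    Hypothetical helper for illustration; not part of Cursor or any LLM SDK.
    """
    observed, expected = observed.strip(), expected.strip()
    if not observed or not expected:
        # A prompt like "That didn't work" carries neither piece of information.
        raise ValueError("state both the observed and the expected behavior")

    parts = []
    if retry:
        # After a failed attempt, say so and ask for a fresh approach.
        parts.append(
            "The applied change did not fix the problem. "
            "Take a step back and reconsider your approach."
        )
    parts.append(f"Observed: {observed}")
    parts.append(f"Expected: {expected}")
    return "\n".join(parts)


prompt = build_fix_prompt(
    observed="The search bar loses focus after typing the first letter.",
    expected="The search bar keeps focus while typing.",
    retry=True,
)
print(prompt)
```

The helper refuses to build a prompt when either piece is missing, which is exactly the gap in a message like “That didn’t work”.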

Another trick I found useful was prompting self-reflection after completing a feature. Simply asking:

“Knowing what you now know, would you do anything differently to implement this?”

…often led to significant improvements in simplicity and readability—mirroring the kind of reflection engineers do naturally after a big piece of work.

What’s the catch?

As powerful as these tools are, LLMs still make a lot of mistakes. Without active supervision, this project could’ve crashed and burned fast. Here are just a few things the agents tried:

- Reverting features they had previously built

- Adding nonexistent API parameters to HTTP requests

- Changing unrelated UI components while editing nearby code

- Attempting to delete the entire frontend app (yes, it tried to delete the web/ directory of my monorepo)

As the codebase grew, these issues multiplied. Simple tweaks introduced regressions more frequently, and longer task chains led to cascading errors. With careful review and solid prompt hygiene, I was able to sidestep major regressions and keep the project on track, but the drop in efficiency was noticeable.
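Two of the mistakes listed above, invented request parameters and deleting a whole directory, lend themselves to a mechanical check before a change is accepted. The following is a minimal sketch of that idea, not something taken from the project. The function names, the `PROTECTED_DIRS` tuple, and the example parameter names are all assumptions made for illustration.

```python
from pathlib import PurePosixPath

# Assumption: top-level directories an agent should never delete.
PROTECTED_DIRS = ("web",)


def is_protected(path: str, protected=PROTECTED_DIRS) -> bool:
    """Return True if deleting `path` would remove protected content."""
    parts = PurePosixPath(path).parts
    if not parts:
        # "" or "." means the repository root, which contains everything.
        return True
    # Compare whole path components so "website/" does not match "web".
    return parts[0] in protected


def blocked_deletions(paths, protected=PROTECTED_DIRS):
    """Filter a list of proposed deletions down to the ones to reject."""
    return [p for p in paths if is_protected(p, protected)]


def unknown_params(sent: dict, allowed: set) -> list:
    """Return request parameters that the endpoint does not define."""
    return sorted(set(sent) - set(allowed))


proposed = ["web/", "website/notes.md", "./web/src/App.tsx", "docs/old.md"]
print(blocked_deletions(proposed))

# Hypothetical endpoint that accepts only "q" and "limit".
print(unknown_params({"q": "shoes", "fuzzy": True}, {"q", "limit"}))
```

Checks like these do not replace reading the diff, but they catch the most destructive suggestions cheaply.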

Final thoughts

These LLMs are incredibly powerful, but they require an experienced engineer at the helm. I don’t necessarily agree with the idea that “everybody is now a programmer”—at least not if you’re trying to build something scalable and robust.

But if you are a software engineer with a solid foundation—and you know how to validate what AI is generating—then tools like Claude and GPT-4o can supercharge your ability to go from 0 to 1. From there, AI shines not as the driver, but as a copilot—amplifying your momentum, not steering the ship.